Why the “AI Eats Itself” Panic Misses the Point

The fear that AI will inevitably collapse by training on its own synthetic output treats a tool like an autonomous actor. The real risk is human incentives—spam, laziness, and the erosion of standards—not some unavoidable self-cannibalizing loop.

The “AI Will Eat Itself” Panic Is Mostly Nonsense

There’s a recurring scare story making the rounds: AI is going to “eat itself” because future models will train on content generated by other AI, the data will get progressively more synthetic, and eventually everything collapses into garbage. The idea is that we’re headed for some kind of informational inbreeding—models copying models copying models until quality degrades beyond repair.

Parts of that fear gesture at something real. But as a whole, the conclusion people jump to—“AI will inevitably poison itself into uselessness”—is complete and utter garbage.

The main reason is simple: AI doesn’t prompt itself. AI doesn’t wake up in the morning and decide to generate a million blog posts, upload them to the internet, and then retrain itself on them out of some self-destructive compulsion. AI does what it’s asked to do. It generates what humans ask it to generate, in the contexts humans place it in, and in response to the incentives humans create.

If there’s an “AI-generated data problem,” it’s not an AI problem. It’s a human behavior and systems problem.

AI Doesn’t Create a World. It Reflects One.

The whole “AI eats itself” frame quietly assumes an autonomous loop:

- AI generates content.

- That content floods the environment.

- AI trains on that environment.

- AI quality decays.

- Repeat until collapse.

But step one is doing far too much work. AI doesn’t generate content by default. It generates content because someone prompts it, or because some product pipeline is designed to produce content at scale.

Even the “worst case” people imagine—AI generating content based on what it “sees around itself”—still depends on human activity. The surrounding world it observes is shaped by humans posting, humans sharing, humans buying, humans clicking, humans rewarding certain outputs.

In either case, human beings are the ones prompting. Human beings are the ones creating the conditions that produce the data. The loop isn’t AI eating itself. It’s people choosing, at scale, to replace certain kinds of work with synthetic output and then acting surprised that the environment becomes more synthetic.

That’s not an inevitability. That’s a set of choices.

The Real Risk Isn’t “AI Collapse.” It’s Incentives + Spam

There is a legitimate concern hiding inside the dramatic framing: when it becomes cheap to generate plausible text, images, and video, the internet can fill up with low-effort sludge. Not because AI is “self-cannibalizing,” but because there’s profit in generating tons of content that looks good enough.

The risk looks like this:

- Search and social incentives reward volume. If traffic can be captured by pumping out thousands of “good enough” pages, someone will do it.

- Platforms struggle to distinguish quality from scale. Moderation and ranking are hard even when humans are the only producers.

- Humans get lazy. People paste outputs without thinking, don’t cite sources, and don’t care about whether anything is actually true—as long as it performs.

That’s not an AI self-destruction scenario. That’s spam. It’s the same old story as SEO farms, engagement bait, and content mills, only now the cost of production is near zero.

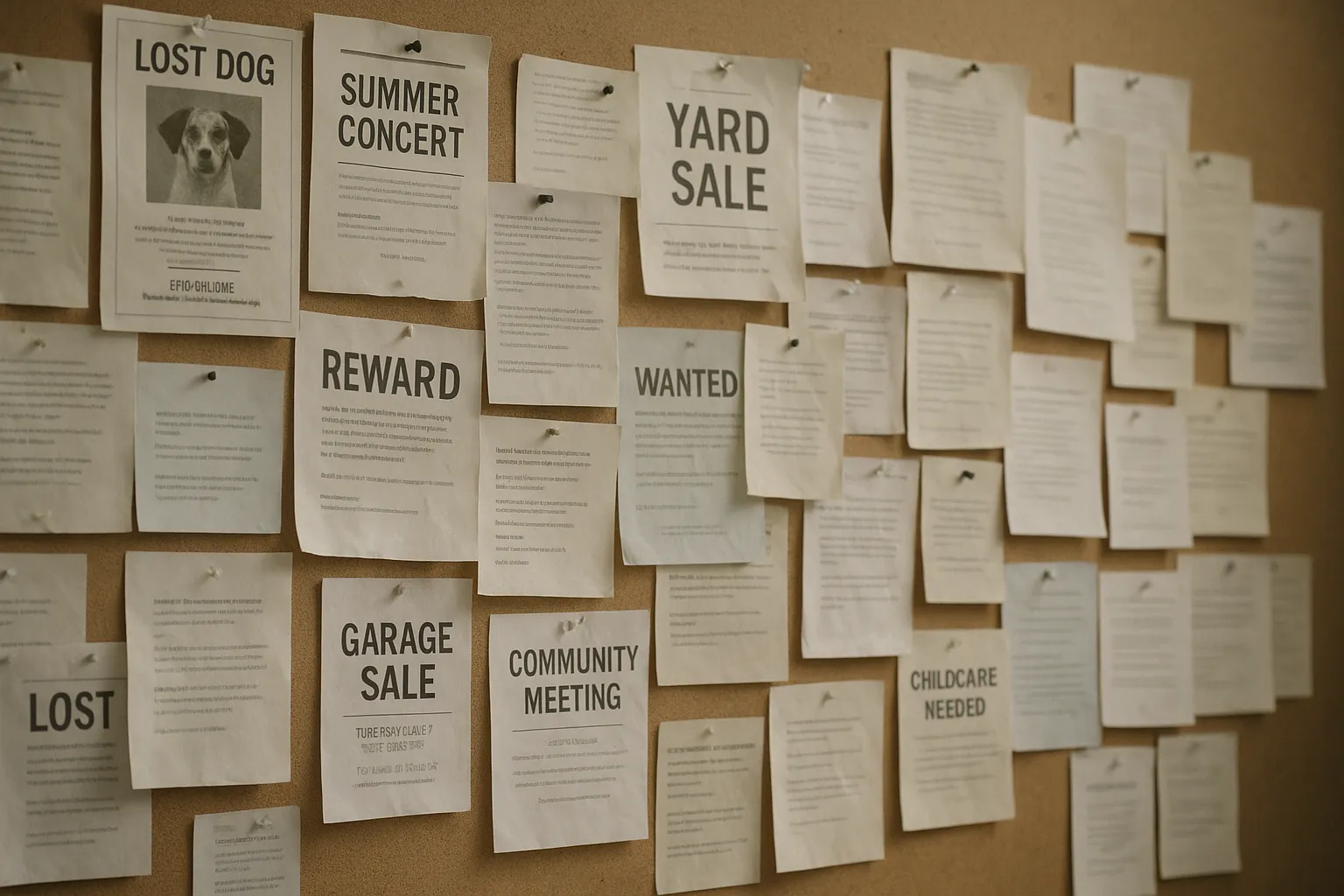

Image credit: Wikimedia Commons

If anything “poisons the well,” it’s the human decision to flood the well.

“Training on AI Data” Isn’t Automatically Toxic

Another sloppy assumption is that “AI-generated” is synonymous with “bad.” It isn’t.

AI-generated text can be:

- High-quality and carefully edited

- Low-quality and completely unedited

- Correct, incorrect, or a mix of both

- Useful, useless, or actively misleading

The label “AI-generated” doesn’t tell you what matters. What matters is the process: who asked for it, what constraints were in place, whether it was checked, whether it was grounded in reality, and whether it was improved by humans who know what they’re doing.

If a human uses AI as a drafting partner and then applies judgment—rewrites, verifies, adds context, removes fluff—that output is not “garbage.” It’s assisted writing. If someone uses AI to generate 400 articles overnight and posts them raw, sure, it’s probably trash. But that’s a production choice, not a law of nature.

The fear narrative smashes all of this into one category and pretends the future is predetermined.

Humans Are the Source of Novelty (And Always Will Be)

The deeper claim behind “AI eats itself” is that AI will run out of fresh, real information. But the world doesn’t stop producing novelty.

As long as human beings exist, we keep generating new data:

- new experiences

- new research

- new art

- new arguments

- new mistakes

- new discoveries

- new slang

- new politics

- new products

- new tragedies and triumphs

That’s not romantic. It’s just true. Humans keep doing things, recording things, reacting to things, and changing the environment.

AI-generated content doesn’t replace reality. It’s commentary, synthesis, imitation, brainstorming, and sometimes hallucination. Reality keeps happening. People keep talking about it. People keep writing about it. People keep filming it. That pipeline of “fresh input” doesn’t vanish unless humans vanish.

None of this means AI-generated data can never be garbage; obviously humans can generate garbage with AI. But the core point stands: AI isn’t headed toward unavoidable degeneration as long as humans remain in the loop, producing new signals and selecting what gets amplified.
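The point about fresh human input can be illustrated with a toy simulation. This is only a sketch under loud assumptions: the "model" is nothing more than a Gaussian fitted to a small dataset, the "real world" is a fixed N(0, 1) distribution, and the names `simulate` and `fresh_fraction` are made up for this example. It says nothing quantitative about real language models. It only shows the shape of the argument: a fully closed loop loses diversity, while a loop that keeps receiving new real data does not.

```python
import random
import statistics


def simulate(generations, sample_size, fresh_fraction, seed=0):
    """Toy loop: fit a Gaussian to the current data, then build the next
    dataset from the fitted model's samples plus (optionally) fresh draws
    from the fixed "real" distribution N(0, 1).

    Returns the fitted standard deviation at each generation.
    """
    rng = random.Random(seed)
    n_fresh = round(sample_size * fresh_fraction)
    n_synthetic = sample_size - n_fresh

    # Generation 0 is entirely "human" data.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    spreads = []
    for _ in range(generations):
        # "Train": estimate mean and spread from whatever data exists now.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        spreads.append(sigma)
        # "Publish": the next dataset mixes model output with new real data.
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_synthetic)]
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_fresh)]
        data = synthetic + fresh
    return spreads


# Closed loop: every generation trains only on the previous model's output.
closed_loop = simulate(generations=500, sample_size=20, fresh_fraction=0.0)
# Open loop: half of each generation's data is newly produced real data.
open_loop = simulate(generations=500, sample_size=20, fresh_fraction=0.5)

print(f"closed loop, final spread:     {closed_loop[-1]:.6f}")
print(f"with fresh data, final spread: {open_loop[-1]:.6f}")
```

With these settings the closed-loop spread should shrink toward zero, while the run that keeps mixing in fresh data should stay close to the real spread of 1. Exact numbers depend on the seed. The difference between the two runs is not the model. It is whether anyone keeps feeding the loop new real data, which is a choice made outside the model.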

The Actual Failure Mode: Humans Stop Caring

If there’s a scenario where things get ugly, it’s not because AI trains on AI. It’s because humans decide they don’t need standards anymore.

The failure mode looks like:

- companies replace writers, editors, and researchers with bulk generation

- institutions stop verifying

- people share what flatters their worldview, not what’s true

- platforms reward speed and emotion over accuracy

- the public loses the ability (or desire) to tell what’s real

That’s a cultural and economic failure. It’s not “AI starving itself.” It’s people outsourcing responsibility.

If you want to be worried about something, be worried about the human tendency to accept plausibility as truth when it’s convenient. AI makes that temptation cheaper and faster.

AI Doesn’t Have Agency. Systems Do.

Saying “AI will eat itself” makes AI sound like a creature with instincts. It isn’t. It’s a tool inside systems: products, markets, institutions, and social networks.

So ask better questions:

- Who is deploying AI, and why?

- What incentives are shaping output quality?

- What happens when the cheapest content wins?

- Where are humans applying judgment, and where are they giving up?

- What gets rewarded: accuracy, originality, entertainment, outrage, or quantity?

Once you frame it that way, the doom story loses its mystical vibe. We’re not watching an organism decay. We’re watching people design environments. Some environments will produce garbage. Others will produce incredible work.

AI isn’t destiny. It’s leverage.

“AI Will Eat Itself” Is a Story People Like

There’s also a psychological reason this narrative keeps popping up: it’s comforting in a weird way. It suggests a built-in limit. It implies that the AI boom will self-correct without anyone needing to do anything. It’s a story where the problem solves itself.

But technology doesn’t have a moral arc. It doesn’t automatically punish people for being lazy. It just scales whatever you feed into it—good processes or bad ones.

If you build a system that rewards low-effort synthetic noise, you’ll get low-effort synthetic noise. If you build a system that rewards originality, verification, and craftsmanship, AI can amplify that too.

Conclusion

The idea that AI will inevitably “eat itself” is mostly a category error: it treats a tool like an autonomous actor. AI generates what humans prompt, what humans deploy, and what humans incentivize. The real question isn’t whether models will collapse into garbage—it’s whether we’re going to keep caring about quality, truth, and judgment while using a machine that can produce endless plausibility on demand.